The Culture of Science

Scientific Ethics

Did you know?

Did you know war crimes during World War II led to the Nuremberg Code, a set of ethical principles that still guides scientific research today? Although most scientists behave ethically, cases of fraud and misconduct highlight the need for a system of ethics to ensure proper behavior and reliable research in science.

In science, as in all professions, some people try to cheat the system. Charles Dawson was one of those people – an amateur British archaeologist and paleontologist born in 1864. By the late nineteenth century, Dawson had made a number of seemingly important fossil discoveries. Not prone to modesty, he named many of his newly discovered species after himself. For example, Dawson found fossil teeth of a previously unknown species of mammal, which he subsequently named Plagiaulax dawsoni. He named one of three new species of dinosaur he found Iguanodon dawsoni and a new form of fossil plant Selaginella dawsoni. His work brought him considerable fame: He was elected a fellow of the British Geological Society and appointed to the Society of Antiquaries of London. The British Museum conferred upon him the title of Honorary Collector, and the English newspaper The Sussex Daily News dubbed him the "Wizard of Sussex."

His most famous discovery, however, came in late 1912, when Dawson showed off parts of a human-looking skull and jawbone to the public and convinced scientists that the fossils were from a new species that represented the missing link between man and ape. Dawson's "Piltdown Man," as the find came to be known, made quite an impact, confounding the scientific community for decades, long after Dawson's death in 1915. Though a few scientists doubted the find from the beginning, it was largely accepted and admired.

In 1949, Kenneth Oakley, a professor of anthropology at Oxford University, dated the skull using a newly available fluorine absorption test and found that it was 500 years old rather than 500,000. Yet Oakley still believed that the skull was genuine and had simply been dated incorrectly. In 1953, Joseph Weiner, a student in physical anthropology at Oxford University, attended a paleontology conference and began to realize that Piltdown Man simply did not fit with other human ancestor fossils. He communicated his suspicion to his professor at Oxford, Wilfred Edward Le Gros Clark, and they followed up with Oakley. Soon after, the three realized that the skull did not represent the missing link but was an elaborate fraud in which the skull of a medieval human had been combined with the jawbone of an orangutan and the teeth of a fossilized chimpanzee. The bones had been chemically treated to make them look older, and the teeth had even been hand filed to make them fit with the skull. In the wake of this revelation, at least 38 of Dawson's finds have since been shown to be fakes, created in his pursuit of fame and recognition.

Advances in science depend on the reliability of the research record, so thankfully, hucksters and cheats like Dawson are the exception rather than the norm in the scientific community. But cases like Dawson's play an important role in helping us understand the system of scientific ethics that has evolved to ensure reliability and proper behavior in science.

The Role of Ethics in Science

Ethics is a set of moral obligations that define right and wrong in our practices and decisions. Many professions have a formalized system of ethical practices that help guide professionals in the field. For example, doctors commonly take the Hippocratic Oath, which, among other things, states that doctors "do no harm" to their patients. Engineers follow an ethical guide that states that they "hold paramount the safety, health, and welfare of the public." Within these professions, as well as within science, the principles become so ingrained that practitioners rarely have to think about adhering to the ethic – it's part of the way they practice. And a breach of ethics is considered very serious, punishable at least within the profession (by revocation of a license, for example) and sometimes by the law as well.

Scientific ethics calls for honesty and integrity in all stages of scientific practice, from reporting results regardless of the outcome to properly attributing collaborators. This system of ethics guides the practice of science, from data collection to publication and beyond. As in other professions, the scientific ethic is deeply integrated into the way scientists work, and they are aware that the reliability of their work and scientific knowledge in general depends upon adhering to that ethic. Many of the ethical principles in science relate to the production of unbiased scientific knowledge, which is critical when others try to build upon or extend research findings. The open publication of data, peer review, replication, and collaboration required by the scientific ethic all help to keep science moving forward by validating research findings and confirming or raising questions about results (see our module Scientific Literature for further information).

Some breaches of the ethical standards, such as fabrication of data, are dealt with by the scientific community through means similar to ethical breaches in other disciplines – removal from a job, for example. But less obvious challenges to the ethical standard occur more frequently, such as giving a scientific competitor an unfairly negative peer review. These incidents are more like parking in a no parking zone – they are against the rules and can be unfair, but they often go unpunished. Sometimes scientists simply make mistakes that may appear to be ethical breaches, such as improperly citing a source or giving a misleading reference. And like any other group that shares goals and ideals, the scientific community works together to deal with all of these incidents as best it can – in some cases with more success than others.

Ethical standards in science

Scientists have long maintained an informal system of ethics and guidelines for conducting research, but documented ethical guidelines did not develop until the mid-twentieth century, after a series of well-publicized ethical breaches and war crimes. Scientific ethics now refers to a standard of conduct for scientists that is generally delineated into two broad categories (Bolton, 2002). First, standards of methods and process address the design, procedures, data analysis, interpretation, and reporting of research efforts. Second, standards of topics and findings address the use of human and animal subjects in research and the ethical implications of certain research findings. Together, these ethical standards help guide scientific research and ensure that research efforts (and researchers) abide by several core principles (Resnik, 1993), including:

- Honesty in reporting of scientific data;

- Careful transcription and analysis of scientific results to avoid error;

- Independent analysis and interpretation of results that are based on data and not on the influence of external sources;

- Open sharing of methods, data, and interpretations through publication and presentation;

- Sufficient validation of results through replication and collaboration with peers;

- Proper crediting of sources of information, data, and ideas;

- Moral obligations to society in general, and, in some disciplines, responsibility in weighing the rights of human and animal subjects.

Ethics of methods and process

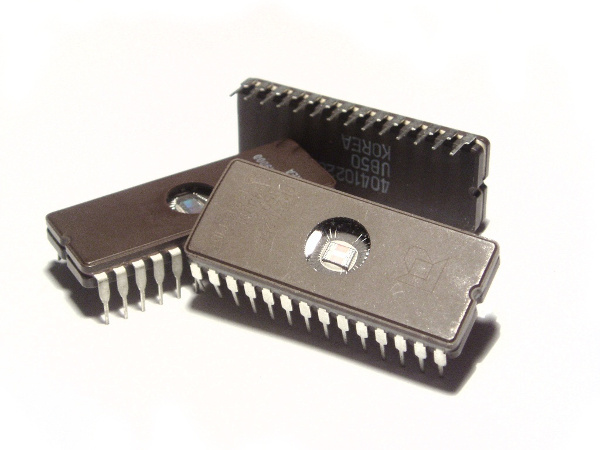

Scientists are human, and humans don't always abide by the law. Understanding some examples of scientific misconduct will help us to understand the importance and consequences of scientific integrity. In 2001, the German physicist Jan Hendrik Schön briefly rose to prominence for what appeared to be a series of breakthrough discoveries in the area of electronics and nanotechnology. Schön and two co-authors published a paper in the journal Nature, claiming to have produced a molecular-scale alternative to the transistor (Figure 2) used commonly in consumer devices (Schön et al., 2001). The implications were revolutionary – a molecular transistor could allow the development of computer microchips far smaller than any available at the time. As a result, Schön received a number of outstanding research awards and the work was deemed one of the "breakthroughs of the year" in 2001 by Science magazine.

However, problems began to appear very quickly. Scientists who tried to replicate Schön's work were unable to do so. Lydia Sohn, then a nanotechnology researcher at Princeton University, noticed that two different experiments carried out by Schön at very different temperatures and published in separate papers appeared to have identical patterns of background noise in the graphs used to present the data (Service, 2002). When confronted with the problem, Schön initially claimed that he had mistakenly submitted the same graph with two different manuscripts. However, soon after, Paul McEuen of Cornell University found the same graph in a third paper. As a result of these suspicions, Bell Laboratories, the research institution where Schön worked, launched an investigation into his research in May 2002. When the committee heading the investigation attempted to study Schön's notes and research data, they found that he kept no laboratory notebooks, had erased all of the raw data files from his computer (claiming he needed the additional storage space for new studies), and had either discarded or damaged beyond recognition all of his experimental samples. The committee eventually concluded that Schön had altered or completely fabricated data in at least 16 instances between 1998 and 2001. Schön was fired from Bell Laboratories on September 25, 2002, the same day it received the report from the investigating committee. On October 31, 2002, the journal Science retracted eight papers authored by Schön; on December 20, 2002, the journal Physical Review retracted six of Schön's papers; and on March 5, 2003, Nature retracted seven that it had published.

These actions – retractions and firing – are the means by which the scientific community deals with serious scientific misconduct. In addition, Schön was banned from working in science for eight years. In 2004, the University of Konstanz in Germany, where Schön received his PhD, took the issue a step further and asked him to return his doctoral papers in an effort to revoke his doctoral degree. In 2014, after several appeals, the highest German court upheld the right of the university to revoke Schön's degree. At the time of the last appeal, Schön was working in industry rather than as a research scientist, and it is unlikely he will be able to find work as a research scientist again. Clearly, the consequences of scientific misconduct can be dire: complete removal from the scientific community.

The Schön incident is often cited as an example of scientific misconduct because he breached many of the core ethical principles of science. Schön admitted to falsifying data to make the evidence of the behavior he observed "more convincing." He also made extensive errors in transcribing and analyzing his data, thus violating the principles of honesty and carefulness. Schön's articles did not describe his methodology in enough detail for other scientists to repeat the work, and he took deliberate steps to obscure his notes and raw data and to prevent the reanalysis of his data and methods. Finally, while the committee reviewing Schön's work exonerated his coauthors of misconduct, a number of questions were raised over whether they exhibited proper oversight of the work in collaborating and co-publishing with Schön. While Schön's motives were never fully identified (he continued to claim that the instances of misconduct could be explained as simple mistakes), it has been proposed that his personal quest for recognition and glory biased his work so much that he focused on supporting specific conclusions instead of objectively analyzing the data he obtained.

Comprehension Checkpoint

The first step toward uncovering Schön's breach of ethics was when other researchers

(A)__tried to replicate Schön's work.

(B)__found an error in Schon's original lab notes.

Ethics of topics and findings

Despite his egregious breach of scientific ethics, no criminal charges were ever filed against Schön. In other cases, actions that breach the scientific ethic also breach more fundamental moral and legal standards. One instance in particular, the brutality of Nazi scientists in World War II, was so severe and discriminatory that it led to the adoption of an international code governing research ethics.

During World War II, Nazi scientists launched a series of studies, some designed to test the limits of human exposure to the elements in the name of preparing German soldiers fighting the war. Notorious among these efforts were experiments on the effects of hypothermia in humans. During these experiments, concentration camp prisoners were forced to sit in ice water or were left naked outdoors in freezing temperatures for hours at a time. Many victims were left to freeze to death slowly, while others were eventually re-warmed with blankets, warm water, or other methods that left them with permanent injuries.

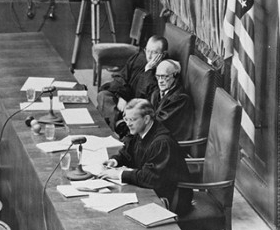

At the end of the war, 23 individuals were tried for war crimes in Nuremberg, Germany, in relation to these studies, and 16 were found guilty (Figure 3). The court proceedings led to a set of guidelines, referred to as the Nuremberg Code, which limits research on human subjects. Among other things, the Nuremberg Code requires that individuals be informed of and consent to the research being conducted; the first standard reads, "The voluntary consent of the human subject is absolutely essential." The code also states that the research risks should be weighed in light of the potential benefits, and it requires that scientists avoid intentionally inflicting physical or mental suffering for research purposes. Importantly, the code also places the responsibility for adhering to the code on "each individual who initiates, directs or engages in the experiment." This is a critical component of the code that implicates every single scientist involved in an experiment – not just the most senior scientist or first author on a paper. The Nuremberg Code was published in 1949 and remains a fundamental document guiding ethical behavior in research on human subjects; in most countries it has since been supplemented by additional guidelines and standards.

Other ethical principles also guide the practice of research on human subjects. For example, a number of government funding sources limit or exclude funding for human cloning due to the ethical questions raised by the practice. Another set of ethical guidelines covers studies involving therapeutic drugs and devices. Research investigating the therapeutic properties of medical devices or drugs is stopped ahead of schedule if a treatment is found to have severe negative side effects. Similarly, large-scale therapeutic studies in which a drug or agent is found to be highly beneficial may be concluded early so that the control patients (those not receiving the effective drug or agent) can be given the new, beneficial treatment.

Comprehension Checkpoint

The Nuremberg Code holds ____ responsible for protecting human subjects.

(A)__the lead scientist on an experiment

(B)__every scientist involved in an experiment

Mistakes versus misconduct

Scientists are fallible and make mistakes – these do not qualify as misconduct. Sometimes, however, the line between mistake and misconduct is not clear. For example, in the late 1980s, a number of research groups were investigating the hypothesis that deuterium atoms could be forced to fuse together at room temperature, releasing tremendous amounts of energy in the process. Nuclear fusion was not a new topic in the 1980s, but researchers at the time were able to initiate fusion reactions only at very high temperatures, so low temperature fusion held great promise as an energy source.

Two scientists at the University of Utah, Stanley Pons and Martin Fleischmann, were among those researching the topic, and they had constructed a system using a palladium electrode and deuterated water to investigate the potential for low temperature fusion reactions. As they worked with their system, they noted that it generated excess heat. Though not all of the data they collected was conclusive, they proposed that the heat was evidence for fusion occurring in their system. Pons and Fleischmann were worried that another scientist might soon announce similar results, and they hoped to patent their invention, so rather than repeating their work and publishing it so that others could confirm the results, they rushed to announce their breakthrough publicly. On March 23, 1989, Pons and Fleischmann, with the support of their university, held a press conference to announce their discovery of "an inexhaustible source of energy."

The announcement of Pons' and Fleischmann's "cold fusion" reactor (Figure 4) caused immediate excitement in the press and was covered by major national and international news organizations. Among scientists, their announcement was simultaneously hailed and criticized. On April 12, Pons received a standing ovation from about 7,000 chemists at the semi-annual meeting of the American Chemical Society. But many scientists chastised the researchers for announcing their discovery in the popular press rather than through the peer-reviewed literature. Pons and Fleischmann eventually did publish their findings in a scientific article (Fleischmann et al., 1990), but problems had already begun to appear. The researchers had a difficult time showing evidence for the production of neutrons by their system, a characteristic that would have confirmed the occurrence of fusion reactions. On May 1, 1989, at a dramatic meeting of the American Physical Society less than five weeks after the press conference in Utah, Steven Koonin, Nathan Lewis, and Charles Barnes from Caltech reported that they had replicated Pons and Fleischmann's experimental conditions, found numerous errors in the Utah scientists' conclusions, and found no evidence for fusion occurring in the system. Soon after that, the US Department of Energy published a report that stated "the experimental results ...reported to date do not present convincing evidence that useful sources of energy will result from the phenomena attributed to cold fusion."

While the conclusions made by Pons and Fleischmann were discredited, the scientists were not accused of fraud – they had not fabricated results or attempted to mislead other scientists, but had made their findings public through unconventional means before going through the process of peer review. They eventually left the University of Utah to work as scientists in the industrial sector. Their mistakes, however, not only affected them but discredited the whole community of legitimate researchers investigating cold fusion. The phrase "cold fusion" became synonymous with junk science, and federal funding in the field almost completely vanished overnight. It took almost 15 years of legitimate research and the renaming of their field from cold fusion to "low energy nuclear reactions" before the US Department of Energy again considered funding well-designed experiments in the field (DOE SC, 2004).

Comprehension Checkpoint

When faulty research results from mistakes rather than deliberate fraud,

(A)__the scientists responsible must pay a fine.

(B)__it can still affect the larger scientific community.

Everyday ethical decisions

Scientists also face ethical decisions in more routine, everyday circumstances. For example, authorship on research papers can raise questions. Authors on papers are expected to have materially contributed to the work in some way and have a responsibility to be familiar with and provide oversight of the work. Jan Hendrik Schön's coauthors clearly failed in this responsibility. Sometimes newcomers to a field will seek to add experienced scientists' names to papers or to grant proposals to increase the perceived importance of their work. While this can lead to valuable collaborations in science, if those senior authors simply accept "honorary" authorship and do not contribute to the work, it raises ethical issues over responsibility in research publishing.

A scientist's source of funding can also potentially bias their work. While scientists generally acknowledge their funding sources in their papers, there have been a number of cases in which lack of adequate disclosure has raised concern. For example, in 2006 Dr. Claudia Henschke, a radiologist at the Weill Cornell Medical College, published a paper that suggested that screening smokers and former smokers with CT chest scans could dramatically reduce the number of lung cancer deaths (Henschke et al., 2006). However, Henschke failed to disclose that the foundation through which her research was funded was itself almost wholly funded by Liggett Tobacco. The case caused an outcry in the scientific community because of the potential bias toward trivializing the impact of lung cancer. Almost two years later, Dr. Henschke published a correction in the journal that provided disclosure of the funding sources of the study (Henschke, 2008). As a result of this and other cases, many journals instituted stricter requirements regarding disclosure of funding sources for published research.

Enforcing ethical standards

A number of incidents have prompted the development of clear and legally enforceable ethical standards in science. For example, in 1932, the US Public Health Service initiated a study of the effects of syphilis in men in Tuskegee, Alabama. When the study began, medical treatments available for syphilis were highly toxic and of questionable effectiveness. Thus, the study sought to determine if patients with syphilis were better off receiving those dangerous treatments or not. The researchers recruited 399 black men who had syphilis and 201 men without syphilis (as a control). Individuals enrolled in what eventually became known as the Tuskegee Syphilis Study were not asked to give their consent and were not informed of their diagnosis; instead they were told they had "bad blood" and could receive free medical treatment (which often consisted of nothing), rides to the clinic, meals, and burial insurance in case of death in return for participating.

By 1947, penicillin appeared to be an effective treatment for syphilis. However, rather than treat the infected participants with penicillin and close the study, the Tuskegee researchers withheld penicillin and information about the drug in the name of studying how syphilis spreads and kills its victims. The unconscionable study continued until 1972, when a leak to the press resulted in a public outcry and its termination. By that time, however, 28 of the original participants had died of syphilis and another 100 had died from medical complications related to syphilis. Further, 40 wives of participants had been infected with syphilis, and 19 children had contracted the disease at birth.

As a result of the Tuskegee Syphilis Study and the Nuremberg Doctors' trial, the United States Congress passed the National Research Act in 1974. The Act created the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research to oversee and regulate the use of human experimentation and defined the requirements for Institutional Review Boards (IRBs). As a result, all institutions that receive federal research funding must establish and maintain an IRB, an independent board of trained researchers who review research plans that involve human subjects to assure that ethical standards are maintained. An institution's IRB must approve any research with human subjects before it is initiated. Regulations governing the operation of the IRB are issued by the US Department of Health and Human Services.

Equally important, individual scientists enforce ethical standards in the profession by promoting open publication and presentation of methods and results that allow other scientists to reproduce and validate their work and findings. Organizations such as the National Academy of Sciences publish ethical guidelines for individuals; one example is the book On Being a Scientist (National Academy of Sciences, 1995). The US Office of Research Integrity also promotes ethics in research by monitoring institutional investigations of research misconduct and promoting education on the issue.

Ethics in science are similar to ethics in our broader society: They promote reasonable conduct and effective cooperation between individuals. While breaches of scientific ethics do occur, as they do in society in general, they are generally dealt with swiftly when identified, and they help us to understand the importance of ethical behavior in our professional practices. Adhering to the scientific ethic assures that data collected during research are reliable and that interpretations are reasonable and well founded, thus allowing the work of a scientist to become part of the growing body of scientific knowledge.

Summary

Ethical standards are a critical part of scientific research. Through examples of scientific fraud, misconduct, and mistakes, this module makes clear how ethical standards help ensure the reliability of research results and the safety of research subjects. The importance and consequences of integrity in the process of science are examined in detail.

Key Concepts

- Ethical conduct in science assures the reliability of research results and the safety of research subjects.

- Ethics in science include: a) standards of methods and process that address research design, procedures, data analysis, interpretation, and reporting; and b) standards of topics and findings that address the use of human and animal subjects in research.

- Replication, collaboration, and peer review all help to minimize ethical breaches, and identify them when they do occur.

QUIZ

- Scientific ethics are commonly described as covering which of the following two categories?

(A)__Standards for individuals, and standards for the entire research community.

(B)__Research ethics, and medical ethics.

(C)__Standards of methods and process, and standards of topics.

(D)__Standards of data collection, and standards of publishing.

- The research papers published by Jan Hendrik Schön were eventually retracted because he had been found to have

(A)__inappropriately profited from his research.

(B)__submitted his work to the wrong journal.

(C)__fabricated much of his data.

(D)__conducted additional research that made him question his original findings.

- The Nuremberg Code

(A)__was developed in response to war crimes by Nazi scientists.

(B)__requires that individuals willingly consent to any research conducted on them.

(C)__requires that scientists avoid intentionally inflicting harm.

(D)__All of the other choices are correct.

- Unlike misconduct, mistakes never get discovered in science.

(A)__true

(B)__false

- The majority of scientists behave ethically.

(A)__true

(B)__false

- Reporting sources of funding of scientific research is important because

(A)__of the potential bias that funding sources may exert on research reports.

(B)__it allows other scientists to fairly compete for funding from those same sources.

(C)__it reveals whether or not the research is for profit.

(D)__it reveals whether or not the scientists are paid or volunteers.

- Federal law in the United States requires that institutions that receive funding from the government maintain a(n) ____ to review all research involving human subjects before it is initiated.

(A)__Human Subject Council

(B)__Research Advisory Board

(C)__Safety in Research Office

(D)__Institutional Review Board

- In addition to assuring the safety of human subjects, scientific ethics help assure

(A)__that all scientists report their findings to the federal government.

(B)__that mistakes are never made in science.

(C)__that scientific laboratories are safe workplaces.